AI Risks for Business from Hallucinations to Data Leaks: No Need to Panic

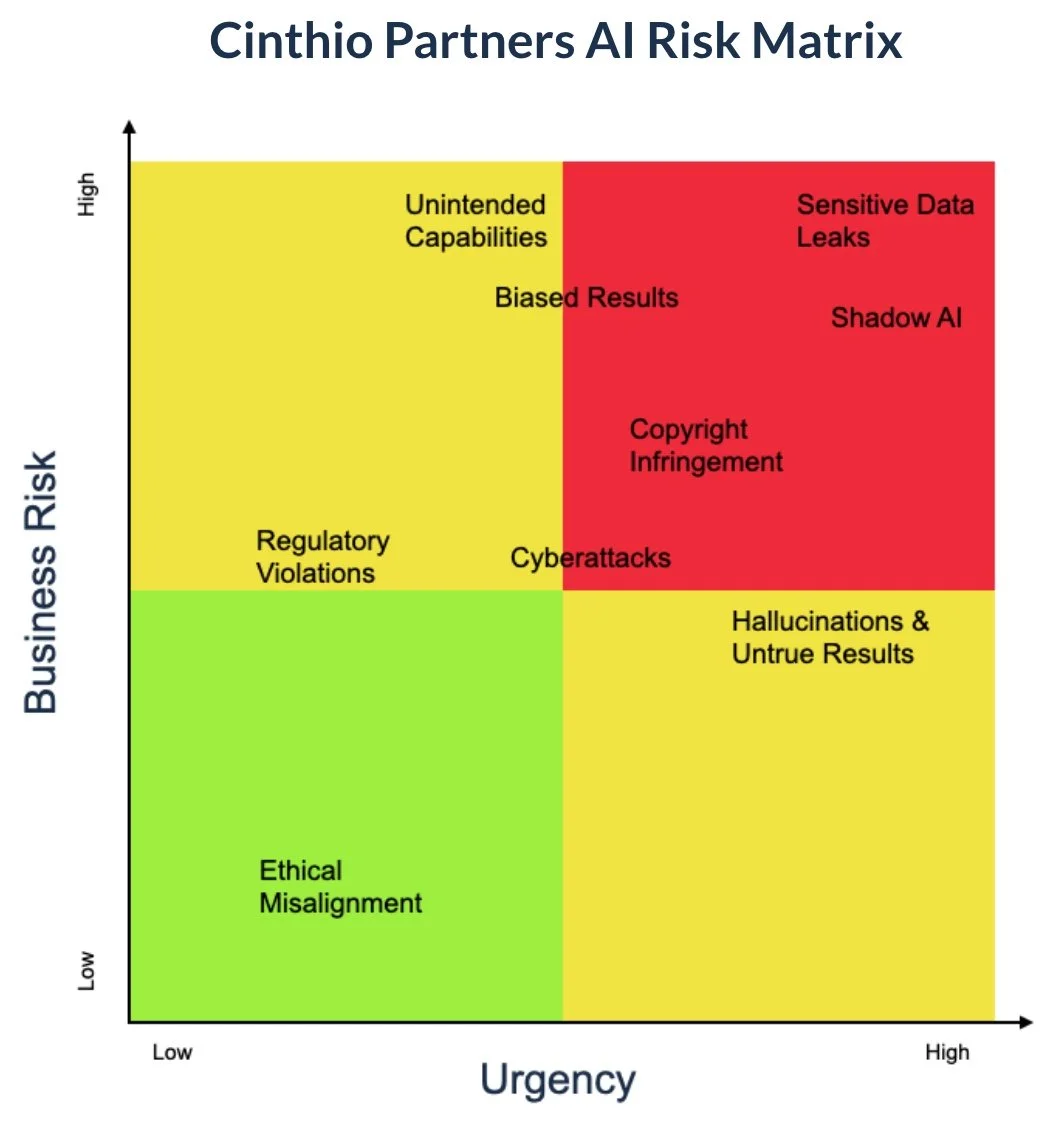

Many corporations don’t have their arms around key risks emerging from AI. There is no need to panic. The Cinthio Partners AI Risk Matrix is designed to help business leaders understand new risks and prioritize their action plan.

Ignore debates on the potential “existential” threats of AI for now. Those discussions are interesting and perhaps important, but they will not help your business. Focus on practical risks and challenges that are already here.

“Business Risk” is the obvious first dimension. We use “Urgency” as the second because our research indicates that only 23% of CXOs know which risks may have already penetrated their business and how often those risks currently occur.
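As a rough illustration of how a two-dimensional matrix can turn into an ordered action plan, here is a minimal Python sketch. The 1 to 5 scale, the `Risk` class, the `prioritize` function, and the "urgency first" ordering are all assumptions made for this example, and the entries are placeholders rather than scores from the Cinthio Partners AI Risk Matrix.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    business_risk: int  # assumed scale: 1 (low) to 5 (high)
    urgency: int        # assumed scale: 1 (low) to 5 (high)


def prioritize(risks):
    """Order risks by urgency first, then business risk, highest first.

    This ordering is one possible choice, not the matrix's official rule.
    """
    return sorted(risks, key=lambda r: (r.urgency, r.business_risk), reverse=True)


# Placeholder entries only; replace with your own assessment.
example = [
    Risk("Risk A", business_risk=3, urgency=5),
    Risk("Risk B", business_risk=5, urgency=2),
    Risk("Risk C", business_risk=4, urgency=5),
]

ordered = [r.name for r in prioritize(example)]
```

Sorting on urgency first reflects the idea that risks already occurring deserve attention before risks that are larger but further out. A team that weighs the two dimensions differently would change the sort key.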

Sensitive Data Leaks

11% of the data pasted into ChatGPT at work is confidential! Team members may unknowingly use a malicious plug-in or an AI tool that captures critical data. We’ve already seen the repercussions with Samsung. The key is to move fast and establish ground rules for your team.

Don’t overthink it - pull together guidelines and educate your teams on the risks, along with the consequences.
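Guidelines can also be backed by a lightweight automated check. The sketch below is a minimal Python example of flagging text that looks sensitive before it is pasted into an AI tool. The pattern list and the `flag_sensitive` function are hypothetical; a real policy would define its own patterns, and simple pattern matching will miss plenty of confidential content.

```python
import re

# Hypothetical patterns for illustration; a real policy would define its own.
PATTERNS = {
    "email address": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "confidentiality marking": re.compile(
        r"\b(confidential|internal only)\b", re.IGNORECASE
    ),
    # Long unbroken tokens often turn out to be keys or credentials.
    "possible secret key": re.compile(r"\b[A-Za-z0-9_-]{32,}\b"),
}


def flag_sensitive(text):
    """Return the names of the patterns found in the text, sorted by name."""
    return sorted(name for name, pattern in PATTERNS.items() if pattern.search(text))
```

For example, `flag_sensitive("Please summarize: CONFIDENTIAL roadmap, contact jane@example.com")` flags both the confidentiality marking and the email address, while an ordinary question returns an empty list. A check like this supports team education; it does not replace it.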

Shadow AI

Shadow AI refers to AI applications developed or procured outside of an organization's official channels. These can pose significant risks to data privacy and security.

Biased Results

AI is only as good as the data it's trained on. If the data has biases, the AI will learn and replicate these biases, leading to skewed results that could affect decision-making or customer interactions.

Bias is not only a matter of protected classes, although those can be greatly impacted by AI bias.

For example, an AI system that is trained on data from people who live in cities may assume that everyone lives in a city. This could lead the AI system to recommend products or services that are only available in cities, or to provide inaccurate information about rural areas.
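One simple way to spot this kind of skew is to measure how each group is represented in the data before training. The following is a minimal Python sketch. The `area` field, the sample records, and the 20% threshold are assumptions chosen for illustration, not recommended values.

```python
from collections import Counter


def group_shares(records, field):
    """Return each group's share of the records for the given field."""
    counts = Counter(record[field] for record in records)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(shares, threshold):
    """Return the groups whose share falls below the threshold, sorted by name."""
    return sorted(group for group, share in shares.items() if share < threshold)


# Made-up sample: nine urban records and one rural record.
sample = [{"area": "urban"}] * 9 + [{"area": "rural"}]
shares = group_shares(sample, "area")
flagged = underrepresented(shares, threshold=0.2)
```

Here the rural group makes up 10% of the sample and is flagged. Note that a group missing from the data entirely will not appear in the result at all, so the list of expected groups still has to be checked by hand.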

Hallucinations

AI systems, including chatbots, might generate responses or data that don't align with reality. These “hallucinations” could lead to misinformation or miscommunication that affects business operations.

Hallucinated answers are essentially convincing lies. They’re called “hallucinations” because calling them “lies” would imply intent to deceive, and AI tools aren’t trying to trick you.

This may sound goofy, but it has real-world implications.

Copyright Infringement

AI's ability to generate content could potentially lead to copyright issues, especially if it inadvertently replicates protected material.

Cyberattacks

With AI becoming a crucial part of business operations, the risk of cyberattacks increases. Hackers could target AI systems, leading to data breaches or disruptions in service.

Unintended Capabilities

AI systems might develop capabilities beyond their intended scope due to the learning nature of the technology. This could lead to unexpected consequences, some of which might not be desirable for the business.

Regulatory Violations

As laws struggle to keep up with the rapid pace of AI development, businesses could inadvertently violate regulations related to data privacy, fair use, and more.

Ethical Misalignment

AI systems could act in ways that do not align with societal or individual ethics, raising concerns about transparency, fairness, and accountability.